Lately, I have been wondering whether Bikram would be a good domain for a robot to negotiate. This morning, however, I was going through the sequence on automatic, rather like a robot, when I came back to myself and found my body not doing what it was supposed to be doing. I realised there are many more variables in the room than I had ever considered.

So, what would I need in order to make a Bikram robot, or bikbot? (I lolled when I thought of that.)

Defining the closed world of our bikbot

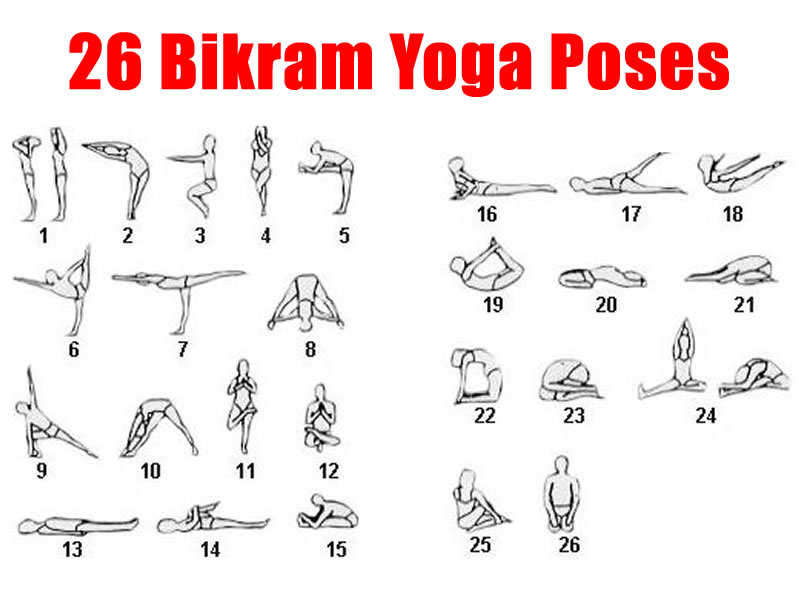

Bikram yoga is a set sequence of 26 asanas, and we hold each pose for about a minute. It is a dialogue-led class, which means the teacher stands at the front of the room and recites a standard script, learned by rote, almost like a mantra. As a student, I follow the teacher’s instructions, do exactly as I am told and watch the shapes I make in the mirror.

At first glance, it seems like something I could easily automate; sometimes it makes me think of robots in car factories repeating the same sequence over and over.

It is hot in the studio, 42°C, which makes me feel euphoric and my body warm enough to be bendy. I enjoy repeating the same movements each time, which, depending on how I am feeling that day, makes me think of myself as either a robot, a Duracell bunny or a dancer. Lots of dancers do Bikram, and it is lovely to practise next to such elegance and precision.

I rarely miss a pose or need to stop. I rarely fall out of an asana or lose my balance in the standing sequence; if I do, it is because something is on my mind or some agitation is lingering in my body and making me feel unbalanced. On a good day, though, I feel like I am on fire and that all things are possible. There is a real mind-body connection in the hot room, which raises the question: how would that work for a bikbot, which has no mind, emotions or consciousness? And would I want to replicate that? After all, isn’t that the idea behind artificial intelligence?

Bikbot hardware

Like Hiroshi Ishiguro, who built an android copy of himself, I would make the bikbot just like me in order to test several theories I have about my body. For example, I have short arms. They are not T-Rex arms, but they are shorter than the script allows, so in Padangusthasana, or toe stand, when I am instructed to straighten my back and let my fingertips support me until I find my balance, I can’t. My hands come straight off the floor and dangle by my sides. It was only once my core had grown stronger and I could hold myself up without my hands that I realised my hands don’t actually reach the floor.

I would definitely make adjustments to the bikbot. I would want to explore my hips: I can only do the full expression of Garudasana, or eagle pose, on one side, because my hip never quite went back to normal after I twisted it out of its socket when I was pregnant, so my leg won’t wrap completely on that side. I would explore weight loss or gain and its influence on performance, as well as adjusting levels of flexibility to the time of day at which the bikbot was practising. I would collect the data and see whether any of my theories hold up in the matrix (the 3D world), assuming the bikbot could cope with the heat and humidity of the room without short-circuiting.

Bikbot software

At first I thought the bikbot could simply, like Siri, hear a command such as Dandayamana Janushirasana, pull up the relevant code and perform that asana. But what if the teacher didn’t say the command exactly right, or didn’t say all the words in the programmed amount of time? What if the teacher went off on a tangent or stopped the class to help someone? This is what is so interesting about Bikram’s McYoga approach. It may seem that the sequence, the class and everything else are the same every time. But nothing is ever the same: we are different every day, and every teacher brings their own spin and their own experiences to reciting the script, which makes every class unique.
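A minimal sketch of the Siri-style lookup, and of why it is brittle, might use fuzzy string matching from Python’s standard `difflib` module. The asana table, the routine names and the 0.6 similarity cutoff are all hypothetical choices for illustration, and the sketch assumes that speech recognition has already turned the teacher’s voice into text.

```python
import difflib
from typing import Optional

# Hypothetical lookup table: asana name -> name of the routine the bikbot would run.
# Only three of the 26 postures are listed, purely for illustration.
ASANAS = {
    "dandayamana janushirasana": "standing_head_to_knee",
    "garudasana": "eagle",
    "padangusthasana": "toe_stand",
}


def match_command(heard: str, cutoff: float = 0.6) -> Optional[str]:
    """Return the routine whose asana name is closest to what was heard.

    difflib.get_close_matches scores candidates with SequenceMatcher.ratio()
    and drops anything below `cutoff`, so a slightly garbled name still
    matches, while an unrelated sentence (a tangent) matches nothing.
    """
    normalised = heard.lower().strip()
    matches = difflib.get_close_matches(normalised, list(ASANAS), n=1, cutoff=cutoff)
    return ASANAS[matches[0]] if matches else None


print(match_command("Dandayamana Janu Sirasana"))           # → standing_head_to_knee
print(match_command("now everyone take a drink of water"))  # → None
```

The cutoff is the design choice that matters: raise it and a mumbled name is missed, lower it and a tangent might be mistaken for a command. Either way, a lookup table only copes with variations someone anticipated in advance.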

Then I thought the teacher could press a button to tell the bikbot to move on to the next asana, but again that is too simple, and it changes the way the teacher does the job of teaching, which is what can happen when we design technology. We don’t want our bikbot to change the way the yoga class is done. Also, it is a yoga practice, not a yoga performance, so the bikbot has to function in a human way, which means accommodating others.

Bikbot deep learning

I decided that the bikbot has to learn how to behave whatever happens, so it needs to be able to approximate the script and to understand it well enough to listen to a new delivery of the script and respond appropriately. For that understanding, I would need machine learning. Machine learning is about learning some properties of one data set, in this case the 90-minute dialogue of a Bikram class, and then testing those properties against another data set: another 90-minute dialogue. The two should be the same, but because we are all human, the script is delivered differently each time, and teachers add or leave out sections depending on the day.
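To make the idea of learning properties from one data set and testing them on another concrete, here is a deliberately tiny sketch in Python. It uses a bag-of-words nearest-neighbour classifier, a much simpler technique than anything a real bikbot would need. The instruction lines and labels are invented paraphrases, not the actual Bikram script, and every name in the code is hypothetical.

```python
from collections import Counter

# Invented, paraphrased instructions from one "class" (training data), each tagged with an asana.
TRAIN = [
    ("lock the knee and kick the foot forward", "standing_head_to_knee"),
    ("wrap the right leg around the left and sit down", "eagle"),
    ("come down onto the toes and straighten the back", "toe_stand"),
]

# The same instructions as a different teacher might phrase them on another day (test data).
TEST = [
    ("kick your foot forward and keep locking that knee", "standing_head_to_knee"),
    ("sit down lower and wrap the leg", "eagle"),
    ("balance on your toes and straighten your back", "toe_stand"),
]


def bag_of_words(text: str) -> Counter:
    """Represent a sentence by how often each word occurs, ignoring word order."""
    return Counter(text.lower().split())


def train(examples):
    """'Learning' here is just remembering each training sentence's word counts and label."""
    return [(bag_of_words(line), label) for line, label in examples]


def predict(model, line: str) -> str:
    """Pick the label of the training sentence that shares the most words with `line`."""
    words = bag_of_words(line)
    _, best_label = max(model, key=lambda pair: sum((pair[0] & words).values()))
    return best_label


model = train(TRAIN)
correct = sum(predict(model, line) == label for line, label in TEST)
print(f"{correct}/{len(TEST)} test instructions recognised")  # → 3/3 test instructions recognised
```

Even this toy shows the difficulty: "locking" and "lock" count as different words, so every variation in delivery has to be covered either by more data or by a richer model.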

I have now done Bikram yoga for four years, on average four times a week, for around 48 weeks of the year. So I have heard the script and practised Bikram approximately 768 times, which at 90 minutes a class is 69,120 minutes, or 1,152 hours, of listening and practising. My goodness, I have been busy.
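The arithmetic behind those totals, written out as a quick Python check using the figures given above (four years, four classes a week, 48 weeks a year, 90 minutes a class):

```python
YEARS = 4
CLASSES_PER_WEEK = 4
WEEKS_PER_YEAR = 48
MINUTES_PER_CLASS = 90

classes = YEARS * CLASSES_PER_WEEK * WEEKS_PER_YEAR  # each class is one hearing of the script
minutes = classes * MINUTES_PER_CLASS
hours = minutes / 60

print(f"{classes} classes, {minutes:,} minutes, {hours:,.0f} hours")
# → 768 classes, 69,120 minutes, 1,152 hours
```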

The question is: is this enough time, and are 768 data sets enough for the bikbot to learn Bikram yoga well enough to respond appropriately when taking a class?

I would need some sort of learning algorithm in which a human, me, rewards or reinforces the learning so that the bikbot knows when it is classifying information correctly. Strictly speaking, that is reinforcement learning from human feedback, which is often combined with deep learning but is not the same thing. As a yogi, I would definitely want to train my bikbot in situ in the studio, so that I could identify the fuzzy areas to focus on when organising the training data, but that would be difficult to do without disturbing everyone else.
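A minimal sketch of learning from a human’s corrections, in Python. It swaps in a multiclass perceptron, a far simpler technique than deep learning or full reinforcement learning: the bikbot guesses which asana an instruction belongs to, and only when I tell it the guess was wrong does it shift its word weights towards the right answer. The class name, labels and phrases are all hypothetical.

```python
from collections import defaultdict


class FeedbackLearner:
    """Multiclass perceptron whose only training signal is a human's correction."""

    def __init__(self, labels):
        self.labels = list(labels)
        # weights[label][word]: how strongly a word points towards a label (starts at 0)
        self.weights = {label: defaultdict(float) for label in self.labels}

    def _score(self, label, words):
        return sum(self.weights[label][w] for w in words)

    def guess(self, line: str) -> str:
        """Return the highest-scoring label; ties go to the first label listed."""
        words = line.lower().split()
        return max(self.labels, key=lambda label: self._score(label, words))

    def feedback(self, line: str, correct_label: str) -> str:
        """Guess, then let the human supply the right label; only wrong guesses update weights."""
        words = line.lower().split()
        guessed = self.guess(line)
        if guessed != correct_label:
            for w in words:
                self.weights[correct_label][w] += 1.0  # reward the right answer
                self.weights[guessed][w] -= 1.0        # penalise the wrong one
        return guessed


learner = FeedbackLearner(["eagle", "toe_stand"])
learner.feedback("come down onto the toes", "toe_stand")  # guesses wrong, gets corrected
learner.feedback("wrap the leg around", "eagle")          # guesses wrong again, corrected again
print(learner.guess("onto the toes"), learner.guess("wrap the leg"))
# → toe_stand eagle
```

Even in this toy, every update needs me to stop and say which asana was right, which is exactly the kind of interruption that would be hard to make in a full class.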

Bikbot behaviour

Being respectful of others is important in the studio, so I would also have to train the bikbot in the social niceties: laying out its mat so that it doesn’t block anyone else, greeting people and asking how they are, and understanding which way the room is laid out so that it faces the right way and doesn’t point its feet at the teacher, which is considered rude in Bikram yoga.

Slightly tangentially: how would it emulate breathing? It needs to be doing the right thing during the breathing exercises at the beginning and end of class. Would it need inflatable lungs? Or would it perform the exercises without breathing? But how could it? And this is only the start. There are many more considerations in making my bikbot human enough to perform a sequence of repeated actions, and here we are once more:

We often train humans to behave like robots whilst trying to get robots to emulate human limitations so that they seem less robotic. Yet we invented robots to perform actions repeatedly and consistently without making mistakes or getting tired. For me, conversely, the point of Bikram is to get tired, to stress my muscles so that, paradoxically, I feel stronger, calmer, and hotter, and end up in a different mental place from where I started.

Would I want my bikbot to go on a similar journey so that it could understand my human conditioning and perhaps give me some insight into my hidden depths? Or do I still believe that a computer cannot tell me anything about myself that I do not already know?

Scientist that I am, I would never say never, even though I know there is a lot of myth-making in machine learning. That said, I am always ready to be surprised, and having a bikbot might be nice: it could help me round the house, freeing up more time for Bikram.

Namaste!
