The words I was weaving, giving and receiving with those lovely women in the audience, were also a conversation I was having with myself.


Fascinated by how people use technology & vice-versa…


I began ‘demystifying’ AI after attending a talk where the speaker, influenced by a ‘techbro’ podcaster, segued into a sci-fi speech about ChatGPT breeding robots.


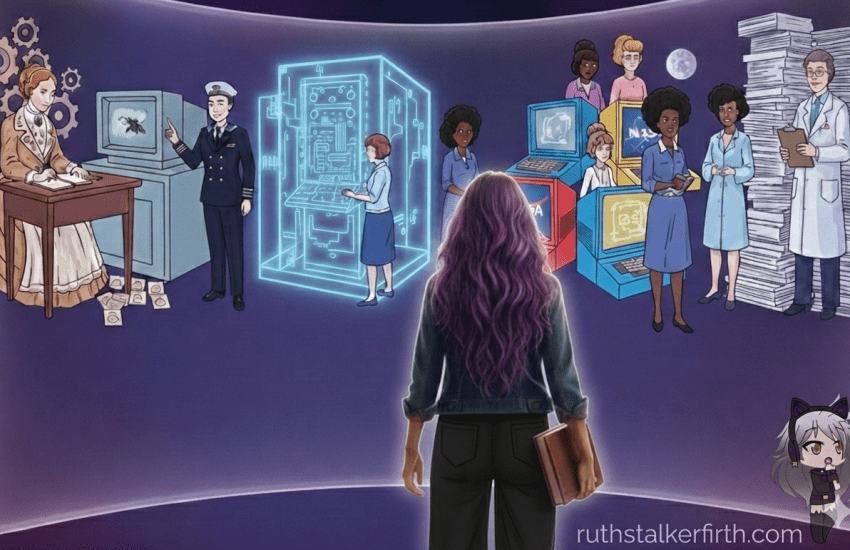

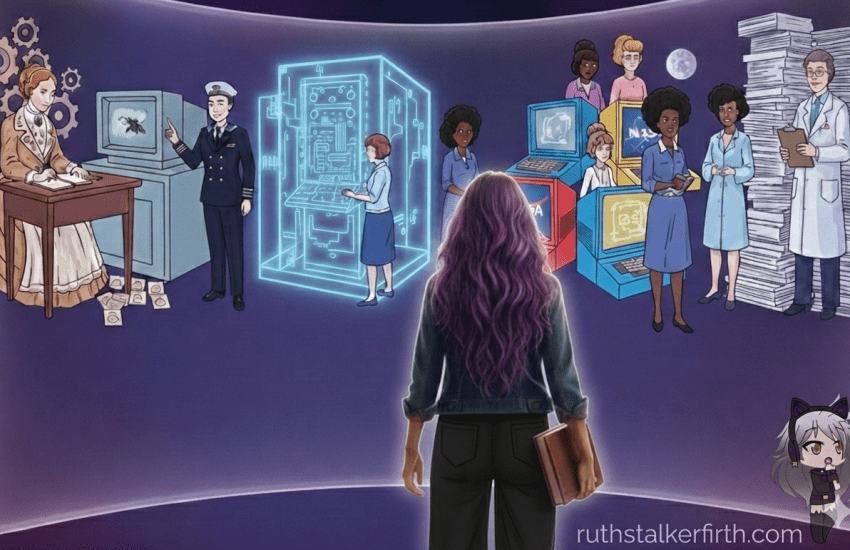

I find that time spent with other women is very powerful, and whilst this talk may seem to be about the past, it is really an invitation to the future.


Carrying a lantern,

I walk through my words, past echoes of unfinished drafts,

I sing over the bones of my blogs to find what I have been searching for

– myself.

In a nutshell, I think AI has been touted as the answer to everything when, in reality, it takes a lot of code, people, data and electricity, and it is highly designed. It does not update or modify itself, nor can you train it as an end user.
